Technical Analysis of an io_uring exploit: CVE-2022-2602

In a landscape where data is among the most important assets for any company, particularly in the IT sector, ensuring its security is a point of paramount importance. For this reason, research in this area has developed through parallel approaches, including hacking competitions, of which Pwn2Own represents the greatest exponent in terms of fame and attractiveness, from the perspectives of both experts and vendors.

This article aims to address part of my internship at Betrusted, part of the Intré Group, where I approached the vulnerability industry through the Pwn2Own case study, focusing on three key aspects:

- An overview of the contest, starting from its inception, the evolution over the years, and its impact on vendors,

- A second part showing the importance of white-hat research, particularly through contests, for both consumers and vendors,

- A final step where I reproduced some of the attacks from recent competitions, as to make an analysis of what happens under the hood.

The attacks were chosen for their representative value, since each of them uses different strategies to achieve results that may be more or less relevant from a security standpoint, i.e., reading or writing memory areas that would not be accessible otherwise. Depending on the context, it is also possible to reach LPE, execute specific commands or cause the application to crash. It is therefore interesting to explore each of the attacks as they all highlight different ways to exploit a vulnerability in multiple contexts, while still following the common thread of their possible use in real-world contexts.

The part I’m going to present is related to CVE-2022-2602, a vulnerability in the Linux kernel that allows LPE by adding a new entry to the /etc/passwd file.

I also explored the details of CVE-2024-0582 affecting io_uring, the same subsystem as the one involved in CVE-2022-2602. After reading some writeups about it, I found it to have many points in common with what I was studying. Thus, I further highlight how much the analysis of previously found vulnerabilities can help both identify new vulnerabilities and defend against them based on techniques previously adopted in similar cases.

ZDI Advisory Details: https://www.zerodayinitiative.com/advisories/ZDI-22-1462/

The vulnerability discussed is intended to showcase how unauthorized access to memory areas can also bring even more critical problems such as local privilege escalation (LPE), thus providing the attacker with the maximum privileges obtainable within a system starting from a normal user.

Since the attack in question involves multiple components of the Linux system, it is first necessary to understand how the different parts work together. Although they are apparently independent from each other, all the elements will play a crucial role within the exploit.

Use-After-Free (UAF)

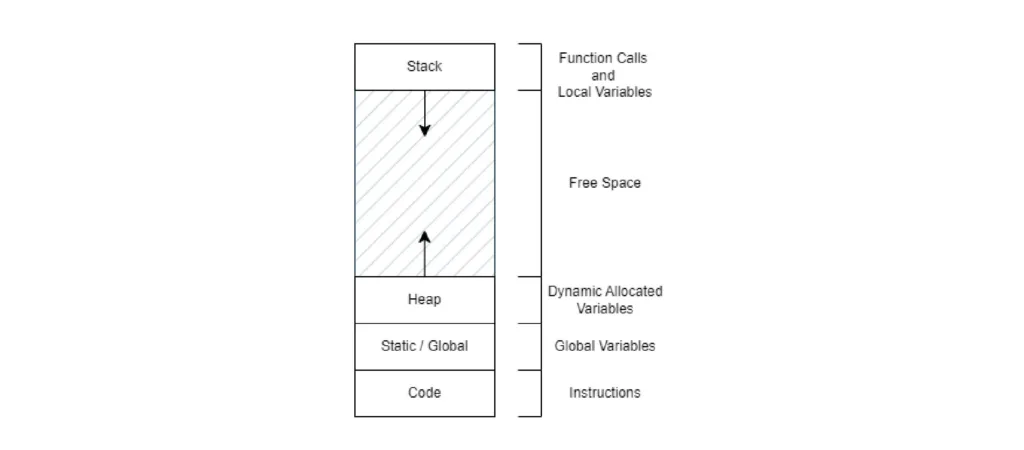

A Use-After-Free (UAF) security issue can occur when a pointer keeps being used after the underlying memory area has been freed. This situation can lead to unexpected behavior including crashes, data corruption or, in the worst cases, execution of malicious code. The only affected memory area is the heap, given how allocation and deallocation of variable size blocks is handled depending on the program needs.

It follows that after freeing up a memory chunk, if the pointers are improperly managed, an attacker could manage to exploit them for their own purposes.

I wrote a simplified implementation of the heap allocator to further illustrate this concept.

To keep track of the state of the various blocks, a heap header is defined at the beginning of each block containing the respective information such as size, state, or pointers to the previous and next blocks. This information is used by other programs to:

• Identify areas that can be allocated without overwriting those used by other processes,

• Make sure not to deallocate memory areas already allocated by other processes,

• Identify the limits of each block, so that different blocks can be merged in case more space is needed.

// Structure for block header

typedef struct block_header

{

size_t size; // Block size

struct block_header *prev; // Previous header pointer

struct block_header *next; // Next header pointer

int free; // Flag free block (1) or busy block (0)

void *self; // Data block pointer

} block_header;

#include <stdio.h>

#include <stdlib.h>

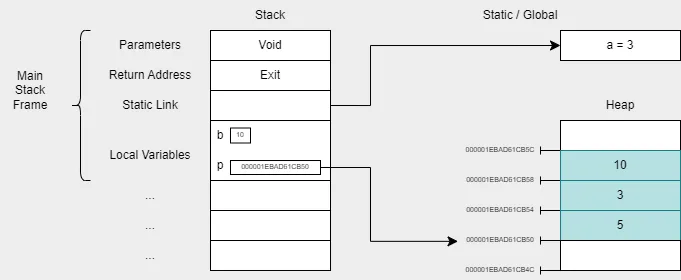

int a;

int main() {

int b;

int *p;

p = (int *) malloc(3 * sizeof(int));

p[0] = 5;

p[1] = 3;

p[2] = 10;

a = p[1];

b = p[2];

printf("The value %d has address: %p \n", p[0], (void *)&p[0]);

printf("The value %d has address: %p \n", p[1], (void *)&p[1]);

printf("The value %d has address: %p \n", p[2], (void *)&p[2]);

free(p);

}

// OUTPUT

// Value 5 has address: 000001EBAD61CB50

// Value 3 has address: 000001EBAD61CB54

// The value 10 has address: 000001EBAD61CB58

At the end of the execution, the heap pointer retained in memory points to the starting address of the allocated memory area, since subsequent ones can be accessed by adding an offset depending on the size of the variables preceding them. The colored part in the figure shows the allocated blocks, their contents and pointer values.

Memory state in the program’s context

The problem arises when a block is deallocated, but its pointer isn’t nulled out and keeps pointing to that same address, constituting what is called a dangling pointer. Since the space has been deallocated, other processes can reallocate it to insert completely different types of data within the same area. If the attacker succeeds, they can use the dangling pointer to store arbitrary data behind it and then subvert the program flow for harmful purposes. Think about function pointers being overwritten by a user-controlled payload.

In some languages such as C, the handling of pointers is left to the abilities of the programmer who must concern with assigning the NULL value to all pointers after deallocating the relevant memory area. He is also entrusted with verifying that the handling has been done correctly. If this does not happen, the pointers will still be available for later use.

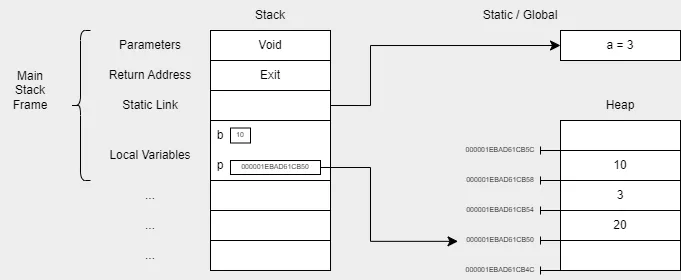

p[0] = 20;

printf("The value %d has address: %p \n", p[0], (void *)&p[0]);

// OUTPUT:

// The value 20 has address: 000001EBAD61CB50

Overwriting a dangling pointer

The p pointer has thus become a dangling pointer to the now deallocated memory area. As shown in the code and in the image, it is then possible to access one of the variables previously in that memory area and modify it without errors of any kind being generated.

File Descriptors and Garbage Collector

Within UNIX systems, input and output (I/O) operations are performed by system calls such as open(), close(), read(), write() or lseek(). The same functions are also used to perform I/O operations on all file types and devices; the task of translating application requests into the appropriate operations is then delegated to the kernel.

To manage open files, file descriptors (fds) are used, which are non-negative integers containing the index of an array that is responsible for keeping track of all the files opened by each process and the related information. Among them are several counters such as reference counts that keep track of the number of processes that have opened that specific file. At the time of its creation, each process has three default file descriptors associated with it:

- Standard Input (0): file from which the process receives input, can also be a device such as a keyboard.

- Standard Output (1): file to which the process writes outputs, such as the terminal that created it.

- Standard Error (2): file where the process writes error information during execution, such as a terminal or log file.

All file descriptors can be redirected as desired. Other fds are usually obtained after executing an open() having as parameter the path to the file you want to open. The return value will match that of the fd.

More specifically, as shown in the figure, each process maintains the pointer to its File Descriptor Table (fdt) in its task_struct, which contains the struct file array that can be referenced by file descriptor number, and each entry is a pointer to a struct file representing the open file.

Each open file is represented by a struct file in the kernel. This structure contains details about the open file, such as the current file offset, file operations, and a pointer to the inode representing the file on disk.

The file struct holds a reference to the inode struct, which represents the actual file in the filesystem and contains metadata like file size, permissions, and ownership.

Given the complexity of the kernel heap and the need to optimize space, a garbage collector (GC) is used to manage the various memory zones within it. Specifically, the GC uses some information, including that just discussed, to delete objects that are no longer used, have no more references, or to make memory areas contiguous after deallocation.

One method to detect whether an object can be deleted is to check its reference count (RC), which represents the number of active references to it from external objects. If this value is zero, then the corresponding memory area can be freed. However, there are some more complex situations in which objects in memory cannot be deleted, since they have RC greater than zero, and at the same time will never be reduced, since the processes that had opened them have already terminated without performing that operation. This deadlock situation gives rise to what are called unbreakable cycles.

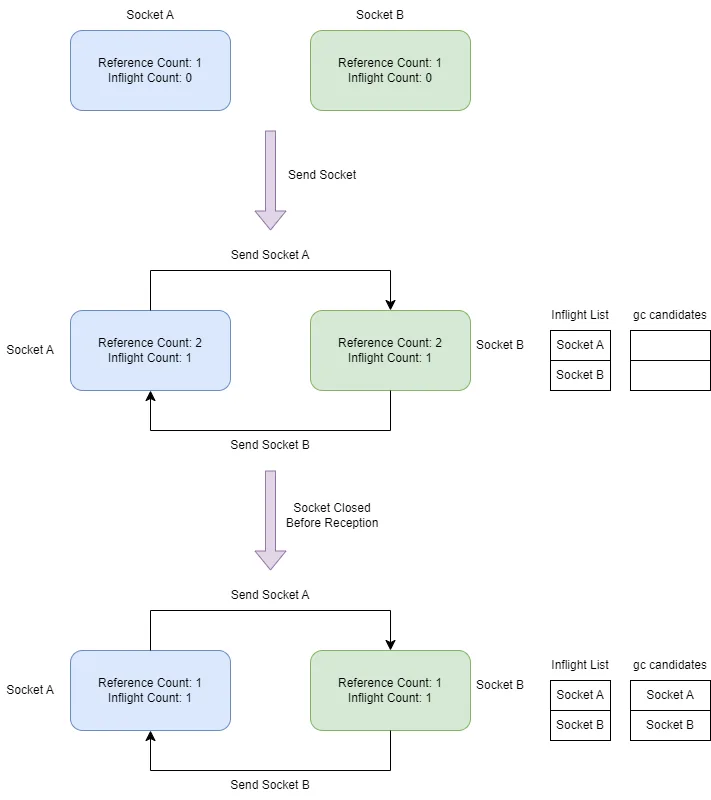

This situation can occur, for example, when you have two sockets passing their descriptor files to each other via system calls such as sendmsg, using SCM_RIGHTS datagrams. The standard situation is when the reference count relative to each socket is incremented upon sending and decremented after receiving. The problem arises when sockets are closed after sending, but before receiving. In this case, both reference counts will be increased by 1, but it will not be possible to decrease them since reception will never occur.

However, the garbage collector is also able to handle such situations by implementing a list called gc_inflight_list containing unix_socks that have been sent, whose RC has been incremented, but which have not yet been received. The specific number of inflights is also kept track of for each file descriptor, so that even unbreakable cycles with more than two sockets can be handled. If at any given time for a socket the inflight count is equal to the reference count, then it is placed on the list of possible candidates for elimination by the garbage collector (gc_candidates).

To figure out whether a socket present among the candidates is within an unbreakable cycle or not, the garbage collector also checks whether there are objects outside gc_candidates that still have an active reference to it:

- If at least one external object has it, then the socket is removed from the list, as its RC is yet to be decremented.

- If no external object has an active reference, the socket is deleted by the garbage collector.

All these garbage collector-related operations are performed in UNIX by the unix_gc function.

io_uring

Until recently, Linux performed I/O operations through various interfaces such as AIO, which was responsible for performing them asynchronously, but with poor performance and inefficiencies for many applications, especially when a large number of file descriptors came into play. To address this problem, starting with version 5.1 of the Linux kernel (2019), io_uring was implemented, an API that allows communication with the kernel to perform I/O while also being able to optimize the execution.

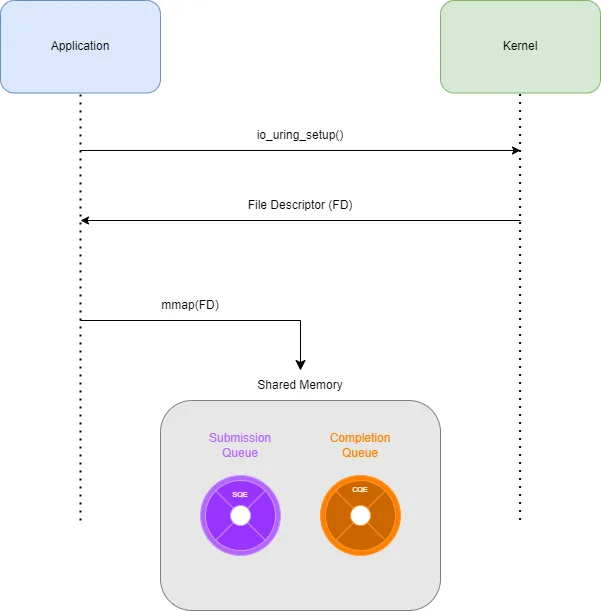

The major difference that allowed it to make the process more efficient and implement features that were not possible before is the use of two circular buffers (ring buffers) shared between kernel space and user space, each with a different task:

- Submission Queue (SQ): contains I/O requests yet to be processed,

- Completion Queue (CQ): contains the requests processed and completed by the kernel.

Thanks to them, operations are faster since their contents do not have to be copied each time from user space to kernel space (zero-copy) and since they are used as an additional method to classic system calls, which can still be performed normally. Additional features include the possibility of polled I/O on the kernel side as well, so that the kernel automatically picks up queued elements without invoking a system call to send them over.

To configure io_uring, a preliminary preparation step is required through some system calls, as shown in the figure:

- io_uring_setup(): creates the context in which the API will be used, specifically initializing the submission queue and completion queue buffers with at least one entry each. This call returns a file descriptor used to perform subsequent operations,

- mmap(): maps the SQ and CQ buffers as a link between kernel space and user space giving rise to a shared memory area, so that other system calls won’t be necessary later on.

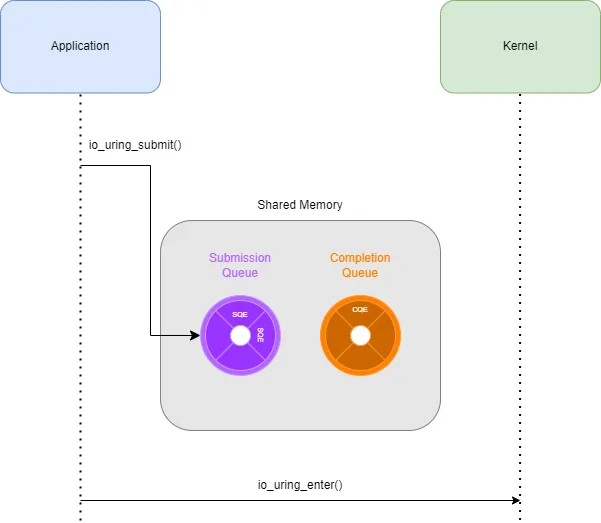

At this point, when you want to perform an I/O operation, you simply create a submission queue entry (SQE) containing information about the operation desired by the application and insert it into the SQ via a call to io_uring_submit. After placing one or more entries inside the buffer, there are two possible outcomes:

- If polled I/O has been enabled, the kernel will periodically check on the SQ and, if it finds an entry, it will start executing the requested operation. This mode is recommended if you want to process requests just after entering them into the SQ, as it avoids a new system call for each entry,

- If not enabled, any number of entries can be added to the SQ. When you want to start processing SQEs, you invoke io_uring_enter to tell the kernel to fetch entries from the SQ like so:

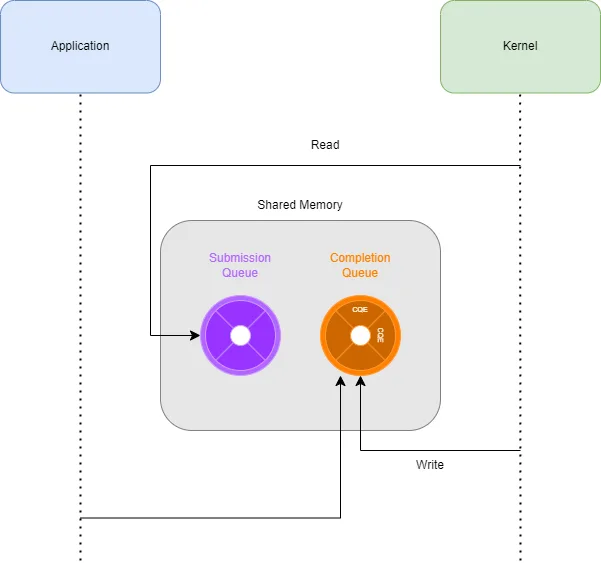

The kernel at this point will have obtained all the entries containing the operations it needs to perform from the SQ. It’s important to note that the kernel will receive requests in the same order as they were sent, but this does not imply the same for task execution and completion, since they are executed concurrently. Also, some requests may be queued up because of their need for resources already in use by other tasks. When the kernel completes a request, it creates the corresponding completion queue entry (CQE) within the completion queue.

The application can then obtain the results of the required operations from the CQ, these fields in each CQE are of most interest:

- user_data: returns the same data received in the related request. It is used to identify which SQE this entry corresponds to,

- res: contains the return value of the system call equivalent to the operation performed by the kernel. In case of execution issues, it returns the error code.

To get data from entries, io_uring_peek_cqe is called.

The use of io_uring is often combined with the liburing library, which simplifies its use for the application, as it will only have to communicate with the library that will handle the various queues and communication with the kernel.

As mentioned earlier and discussed in more detail during the attack, the various operations performed within io_uring often make use of file descriptors. Among these operations, it’s useful to focus on io_uring_register, which is precisely responsible for registering various types of resources such as buffers or files directly into kernel space so that io_uring can reuse them later, reducing the costs in terms of time per operation. If you use this syscall to register an fd in the context created during setup, the application will no longer have to keep track of it and can then discard it via garbage collection.

The vulnerability

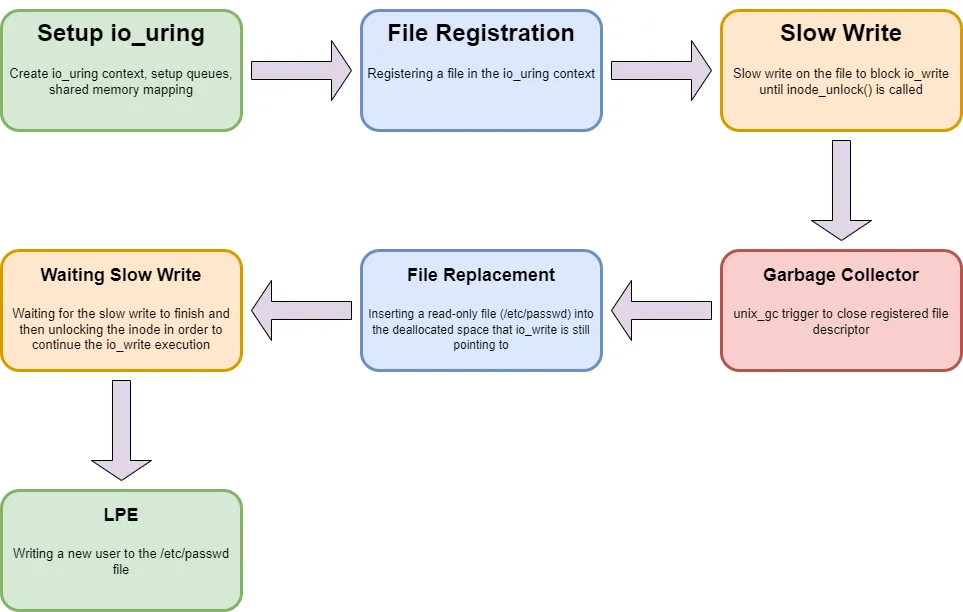

The vulnerability arises from the io_uring context itself, at the point when the garbage collector releases the io_uring context fd obtained during setup, consequently releasing all associated fds. If one of these is still in use by a process that is handling one of the requests, it will reference a freed memory area, causing a UAF. The attacker will thus be able to write to a read-only file.

To achieve this, the attacker tries to block the execution of the file operation, free the file previously registered with io_uring, replace the file structure released by the GC with one of a read-only file and continue execution. Since the write permissions checks only occur before the operation begins, if the replacement is successful, when execution resumes it will be possible to modify it without generating errors. The most straightforward file you want to use as a replacement is the /etc/passwd file, allowing us to add a new user with credentials of our choice, having root privileges and using this new account to perform any privileged task.

One way to pause execution is to use inode locking, a mechanism designed to make write access to a file possible for only one process at a time. However, this mechanism can be exploited by creating a thread that writes to the same target file before executing the write using io_uring, so that the latter cannot terminate until the thread ends. This technique is called slow write and can be performed by generating a concurrent thread that writes a large amount of data to the target file.

Technical Analysis

In this section, I present a technical analysis of the exploit from kiks7 and LukeGix, which implements the techniques just described.

An initial dynamic analysis was done with debugging tools such as strace, which provided information on system calls and signals received during execution. The outputs were cleaned up to present only the most relevant sections.

socketpair(AF_UNIX, SOCK_DGRAM, 0, [3, 4]) = 0

io_uring_setup(32, {flags=IORING_SETUP_SQPOLL, sq_thread_cpu=0, sq_thread_idle=0, sq_entries=32, cq_entries=64, features=IORING_FEAT_SINGLE_MMAP|..., sq_off={head=0, tail=64, ring_mask=256, ring_entries=264, flags=276, dropped=272, array=1344}, cq_off={head=128, tail=192, ring_mask=260, ring_entries=268, overflow=284, cqes=320, flags=280}}) = 5

openat(AT_FDCWD, "/tmp/rwA," O_RDWR|O_CREAT|O_APPEND, 0644) = 6

From this first output, we can see how the setup phase described earlier takes place in practice. After starting execution, the first two local UNIX sockets of type SOCK_DGRAM are created, this means that the messages exchanged will be datagrams and there will be no connection between the two parties. The first 0 in the socketpair call indicates that the default protocol will be used, this is of no particular interest for the purposes of the attack, as opposed to the pair of numbers [3, 4], the fds of the two created sockets. The final 0 is the return value, signaling that the operation was successful.

Instead, on line 2 we find the setup of io_uring and the related data structures necessary for its proper operation, among which we note the specification of the submission and completion queues. The returned fd related to io_uring is 5.

When finished, /tmp/rwA (any file which you have read and write permissions on) is opened to ensure that the same permissions are kept after replacing the fd, which for this file has value 6.

io_uring_register(5, IORING_REGISTER_FILES, [4, 6], 2) = 0

close(6) = 0

sendmsg(3, {msg_name=NULL, msg_namelen=0, msg_iov=NULL, msg_iovlen=0, msg_control=[{cmsg_len=20, cmsg_level=SOL_SOCKET, cmsg_type=SCM_RIGHTS, cmsg_data=[5]}], msg_controllen=24, msg_flags=0}, 0) = 0

close(3) = 0

close(4) = 0

In the io_uring context (fs=5), files having fds equal to 4 and 6, relating to one of the initial sockets and the /tmp/rwA file, are registered. A socket is then sent via sendmsg to fd 3, i.e. the first created socket, containing in its data (cmsg_data) the file descriptor 5, related to the io_uring context. Since they have been registered, it will no longer be necessary to keep track of those open descriptors; to optimize the process, the file and sockets are then closed.

mmap(NULL, 8392704, PROT_NONE, MAP_PRIVATE|MAP_ANONYMOUS|MAP_STACK, -1, 0) = 0x7fdceb01c000

mprotect(0x7fdceb01d000, 8388608, PROT_READ|PROT_WRITE) = 0

[...]

clock_nanosleep(CLOCK_REALTIME, 0, {tv_sec=1, tv_nsec=0}, 0x7ffe38924090) = 0

mmap(NULL, 1472, PROT_READ|PROT_WRITE, MAP_SHARED|MAP_POPULATE, 5, 0) = 0x7fdceb01b000

mmap(NULL, 2048, PROT_READ|PROT_WRITE, MAP_SHARED|MAP_POPULATE, 5, 0x10000000) = 0x7fdceb01a000

A virtual private memory zone to be used by the stack is then created, having a size of 8392704 bytes and initially without read and write protections, which are added soon after. The starting address assigned by the kernel for this zone is 0x7fdceb01c000.

After a few more operations, two additional virtual memory zones of much smaller size are mapped, but this time shared and with protections already set, having 5 as the file descriptor and indicating the shared io_uring context.

io_uring_enter(5, 1, 0, IORING_ENTER_SQ_WAKEUP, NULL, 8) = 1

clock_nanosleep(CLOCK_REALTIME, 0, {tv_sec=2, tv_nsec=0}, 0x7ffe38924090) = 0

munmap(0x7fdceb01a000, 2048) = 0

munmap(0x7fdceb01b000, 1472) = 0

close(5) = 0

clone(child_stack=NULL, flags=CLONE_CHILD_CLEARTID|CLONE_CHILD_SETTID|SIGCHLD, child_tidptr=0x7d0690) = 2200

clock_nanosleep(CLOCK_REALTIME, 0, {tv_sec=2, tv_nsec=0}, {tv_sec=2, tv_nsec=46014}) = ? ERESTART_RESTARTBLOCK (Interrupted by signal)

--- SIGCHLD {si_signo=SIGCHLD, si_code=CLD_EXITED, si_pid=2200, si_uid=1000, si_status=0, si_utime=0, si_stime=0} ---

restart_syscall(<... resuming interrupted clock_nanosleep ...>) = 0

openat(AT_FDCWD, "/etc/passwd", O_RDONLY|O_DIRECT) = 4

In this last step, first we send the SQEs to the io_uring module, returning the value 1 since only one operation was sent. The two previously mapped shared memory areas are unmapped, so the descriptor file associated with io_uring can also be released. When finished, a new process is created, immediately suspended and interrupted by the SIGCHILD signal used when a child process terminates. It then resumes the sleep phase and opens the PROT_WRITE file with fd 4.

At this point, we only have to wait for the slow write to finish, so that the interrupted write operation can resume, but this time on the /etc/passwd file replacing the old one, even though it was opened in read-only mode, since the permissions check was performed at the beginning and not when execution resumes.

Using strace, however, it is only possible to obtain basic information, if you need something more precise about what is happening, you need to turn to more specialized tools such as ftrace. This framework makes it possible to obtain a complete list of occurrences within the kernel, so that a history of the kernel functions called is displayed, with the duration attached.

The substantial disadvantage of this approach, however, is the fact that the output, due to the extreme level of detail obtained, is more complicated to interpret and it is therefore necessary to filter out calls relevant to our purpose.

Setting up ftrace is pretty simple once you have the correct kernel configuration in place:

CONFIG_FUNCTION_TRACER=y CONFIG_FUNCTION_GRAPH_TRACER=y CONFIG_STACK_TRACER=y CONFIG_DYNAMIC_FTRACE=y

The ftrace subsystem will be available under /sys/kernel/tracing and can be enabled in the following way:

# Use the function_graph tracer root@ubuntu:/sys/kernel/tracing# echo function_graph > current_tracer # Start tracing root@ubuntu:/sys/kernel/tracing# echo 1 > tracing_on # Print function trace root@ubuntu:/sys/kernel/tracing# cat trace # Stop ftrace root@ubuntu:/sys/kernel/tracing# echo 0 > tracing_on

Alternatively, we can employ the simpler function tracer and only capture the interesting functions with the following command:

# Only trace ext4 events root@ubuntu:/sys/kernel/tracing# echo ext4_* > set_ftrace_filter

# FUNCTION CALLS

# | | | |

[...]

ksys_write() {

__fdget_pos() {

__fget_light() {

__fget_files() {

rcu_read_unlock_strict();

}}}Within __x64_sys_write() which deals with write requests, the first function called is ksys_write(), which physically performs the write, taking advantage of the information obtained from fdget and fget including permissions. It is only at this stage that the target file is checked.

vfs_write() {

rw_verify_area() {

security_file_permission() {

apparmor_file_permission() {

aa_file_perm() {

rcu_read_unlock_strict();

}}}}

These checks ensure that our file was opened in write mode and can be modified. The kernel then moves on to verifying the memory area on which the writing will take place, it specifically checks the size of the input to be written to be below the maximum threshold and, if not, it gets truncated. Security checks are then delegated to the AppArmor module to verify that the user has the necessary privileges to write to the specified file path. In the end the kernel takes care of the synchronization routines to manage any other processes that are reading from the same file. Once finished, if everything is good, we move on to writing to the kernel’s virtual file system (VFS).

[...]

mutex_trylock();

__check_object_size() {

__check_object_size.part.0() {

check_stack_object();

__virt_addr_valid();

__check_heap_object();

}}

Next, we try and lock the resource, so that no other thread can access it while the write is occurring. To make sure that no thread has already locked it, several checks are made, such as its address and size, as to be certain that the object being operated on is the correct one.

__kmalloc() {

kmalloc_slab();

should_failslab();

___slab_alloc() {

get_partial_node.part.0() {

_raw_spin_lock_irqsave();

_raw_spin_unlock_irqrestore();

}}}

The kernel then executes several functions with the goal of allocating a specific memory area, using slab to improve performance and reduce memory fragmentation, and then checking that the execution of these functions is not the cause of any memory issue. Ftrace doesn’t provide any detail about the specific object being allocated, though we presume it to be a filp custom cache object.

[...]

fput() {

fput_many();

}

__x64_sys_futex() {

do_futex() {

futex_wake() {

get_futex_key();

hash_futex();

_raw_spin_lock();

mark_wake_futex() {

__anywhere_futex();

wake_q_add_safe();

}

Upon completion of the operations managed through a queue containing the work to be performed by the different cores, resources must be freed so that other processes can use them for their own needs. When a thread is done using a resource, the kernel might have to awaken all processes waiting for it via futex, so that they can continue their own execution. This is precisely the case with the awakening of the write to the /etc/passwd file after the end of the slow write.

kfree() {

obj_cgroup_uncharge() {

refill_obj_stock();

}

mod_objcg_state();

rcu_read_unlock_strict();

__slab_free();

}

kmem_cache_free() {

obj_cgroup_uncharge() {

refill_obj_stock();

}

mod_objcg_state();

rcu_read_unlock_strict();

}

While freeing used resources, the kernel must also deal with freeing the memory areas of the allocated file structs. Specifically, it must locate the slabs that have been previously allocated and make them available to other processes through various kfree calls. This process must be repeated for the allocated virtual memory and dedicated caches.

By the end of all these steps, the /etc/passwd file will have been modified, and root privileges can be obtained by logging in with the new root user.

CVE-2024-0582

The importance of io_uring over the years, due to its ability to improve the performance of systems by avoiding context switches caused by blocking system calls and replacing them with asynchronous operations, has caused it to become one of the most attractive targets for many attackers. This preference is also due to two additional reasons:

- First, during its execution, it interacts with numerous critical points of the Linux system, enticing attackers to find flaws within it to then have a better chance of succeeding in their aims

- Second, almost every application somewhat needs to perform I/O operations, providing attackers with a huge attack surface and as many different types of vectors

Among the various vulnerabilities discovered, the one in January 2024 turns out to have numerous points in common with the one I just addressed in this article.

The most important aspect is that it can be exploited through a data-only exploit, meaning that the program’s execution flow is not altered, but focuses on modifying existing data within kernel structures. The characteristics of this attack take on considerable importance in practice because they allow circumventing certain security measures implemented by all operating systems, such as control-flow integrity (CFI), which allows control flow present in the binary to be checked to ensure that it is not modified by external agents.

The main similarity between the two vulnerabilities is that they are both UAFs that focus on gaining read and write access to the various memory pages freed after registration. This will make it possible to control all objects within those pages after their reallocation.

The critical point in this case turns out to be a change made since kernel version 6.4, which allows the application to delegate the allocation of shared memory needed to register ring buffers by the kernel. This space, managed by the io_uring subsystem, will have to be mapped by the application to access it later and proceed with its normal operations covered in this article.

The problem arises when the application decides to call the ring unregister function, causing the kernel to free up allocated memory. When this happens, no checks are made to verify that an unmap of that same area has also been called in user space. If this hasn’t happened, the application will then have access to those pages, even when they are reallocated for other purposes, thus granting it the ability to modify the objects within them.

At this point, if a specific file is to be accessed, it shall be opened within one of the pages over which we have control. As we have seen, the most effective way to perform LPE is by adding a new entry to the /etc/passwd file to grant yourself access with a new user having root privileges.

To allocate the controlled file structure on one of the pages over which you have control, you can take advantage of a technique called heap spraying. This technique involves opening the target file in read-only mode numerous times, without ever closing any of them. Since the access is read-only, the operations will not need the lock, which is needed instead for writing.

Each time it is opened, the space available in the partially allocated cache will be used first, at the end of which fresh memory pages will be allocated. Upon each allocation the space available will decrease, consequently increasing the probability that a new open() will allocate the file structure within one of the pages over which you have control. When this happens, since you have full control over the objects inside, you will be able to change the flags in the file structure, thus adding the write permissions. Once this is done you can freely edit the file, adding a new user or modifying existing ones when possible.

The continuous discovery of vulnerabilities in widely spread software makes the knowledge gained from in-depth analysis of the underlying issues essential in reacting to 0-day threats, especially when, as in this case, we must quickly find a way to mitigate the risk while waiting for the patch to be released. Vendors should keep in mind that no software can be defined as truly secure and vulnerability-free since there is no way to prove it. Despite this, the contribution made by hacking contests, which continue to push researchers to compete, has always been and will continue to be one of the key resources to innovate in cybersecurity and ensure the improvement of products on the market.

This article, like the entire internship, would not have been possible without the help of Betrusted, of the tutor Marco Loregian and Davide Ornaghi, who supported me during the internship months and took care of the translation. At the same time, the writeups and open-source material consulted and cited below have been of vital importance to understand and deepen these topics.

Writeups and source code

- Linux Kernel Source Code:

- “DirtyCred Remastered: how to turn an UAF into Privilege Escalation” by LukeGix:

- “Mind the Patch Gap: Exploiting an io_uring Vulnerability in Ubuntu” by Oriol Castejón:

- “The quantum state of Linux kernel garbage collection CVE-2021-0920 (Part I)” by Xingyu Jin:

- “Welcome to Lord of the io_uring”:

Davide Ornaghi is the supervisor of this article.

Scopri come possiamo aiutarti

Troviamo insieme le soluzioni più adatte per affrontare le sfide che ogni giorno la tua impresa è chiamata ad affrontare.